New paper accepted to Decision Support Systems


Super excited to share that the paper “Investigating the Impact of Differential Privacy Obfuscation on Users’ Data Disclosure Decisions” by Michael Khavkin and Eran Toch was just accepted to Decision Support Systems. Link to the journal proof: https://doi.org/10.1016/j.dss.2025.114474

The Question

Differential Privacy is the gold standard for data anonymization because it provides mathematically provable privacy guarantees for individuals. It has been adopted by companies such as Apple to collect aggregated statistics about app usage, and by government agencies like the U.S. Census Bureau to protect individual privacy when releasing public data. Individuals can often choose whether to share their data, typically in exchange for explicit or implicit compensation. However, little is known about how these formal privacy guarantees influence individuals’ behavior or their willingness to share data.

The study

We designed two online experiments using the Prolific platform:

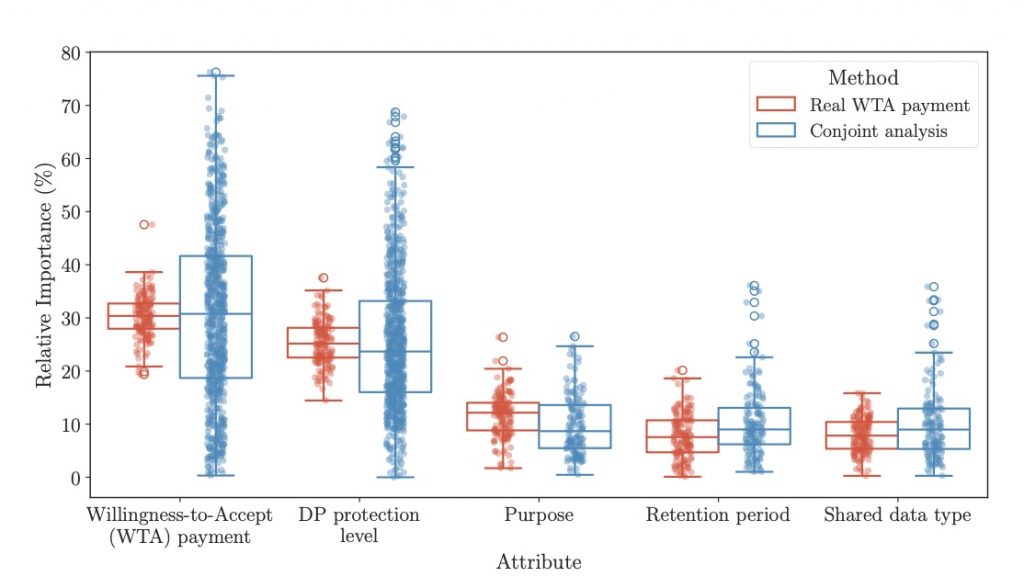

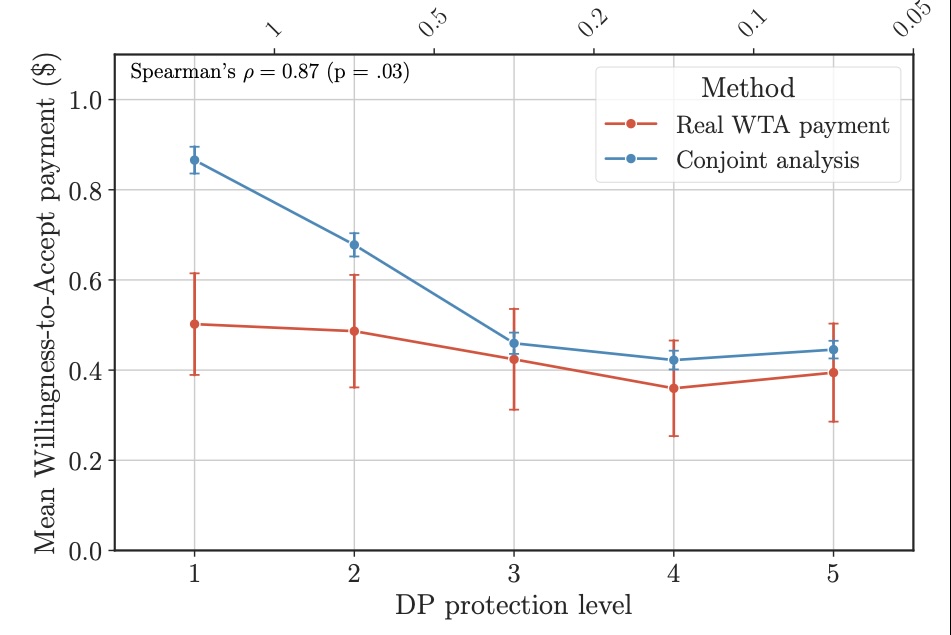

What did we find?

Privacy guarantees strongly influenced people’s choices, second only to monetary rewards. On average, a 1-unit increase in the privacy guarantees resulted in a 14% decrease in the conjoint preference and an 8% decrease in the compensation.

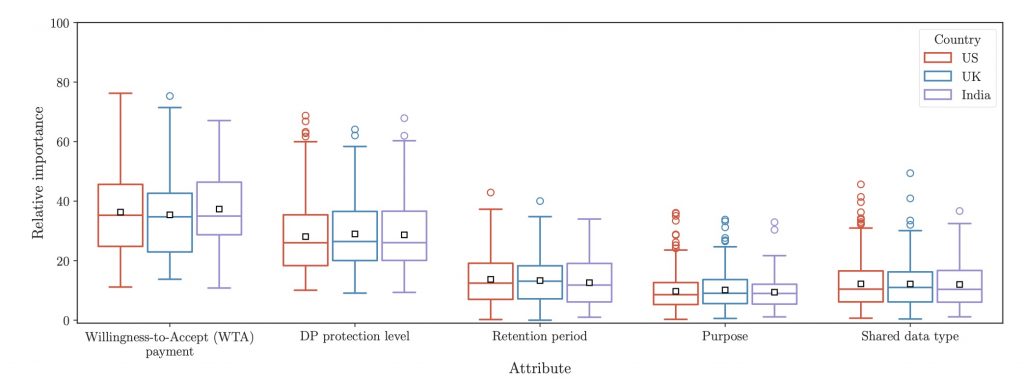

Similar effects were found in both the conjoint analysis and the willingness-to-accept offers. Still, participants were willing to accept smaller real payments for the same level of privacy protection. The country in which the experiment was carried out didn’t have a significant effect on the results. As in previous studies, people’s privacy behavior differed less across countries than one might imagine.

What does it mean?

Our findings suggest that when privacy guarantees are communicated, they can have a real impact on users’ willingness to share data and can help balance privacy protection with compensation costs. The better the privacy, the less compensation people demanded to share their data. We also demonstrate that conjoint analysis is a useful tool for exploring privacy preferences at scale, even if it inflates how much people say they need to be paid.
