ChatGPT in the Public Eye: Ethical Principles and Generative Concerns in Social Media Discussions


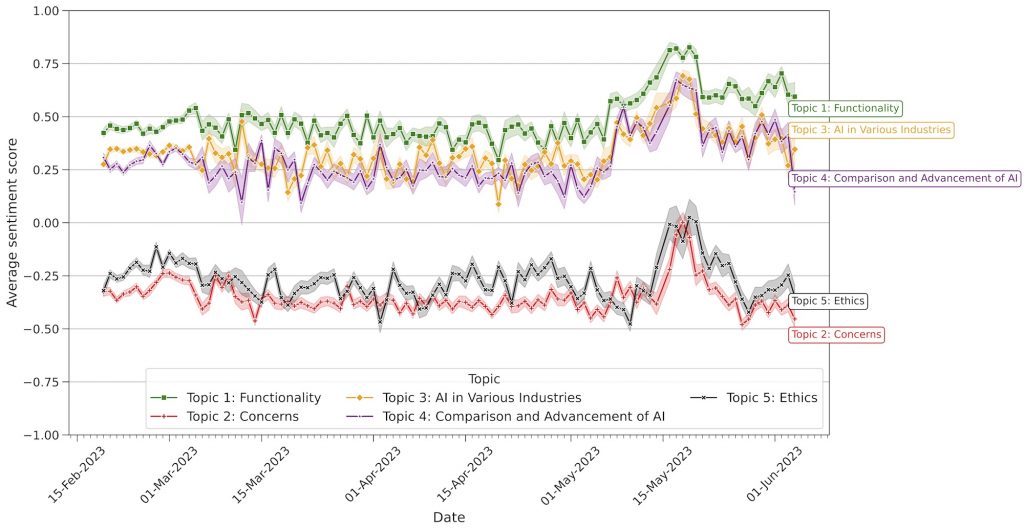

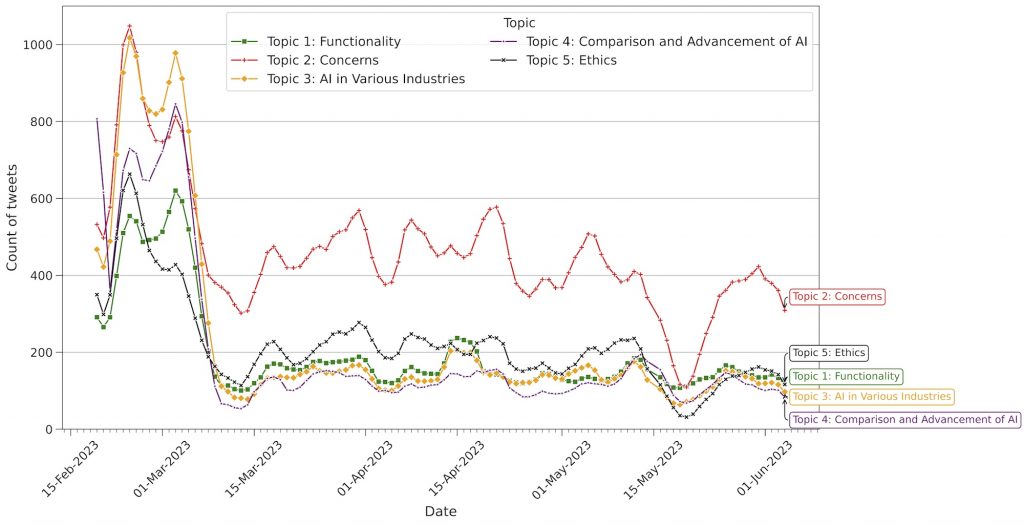

Our recent paper, “ChatGPT in the Public Eye: Ethical Principles and Generative Concerns in Social Media Discussions,” has just been published in New Media & Society! In the paper, we analyzed 350K tweets posted in the months following OpenAI’s launch of ChatGPT 3.5. Using unsupervised topic modeling, we identified five main categories of reactions: ChatGPT Functionality, AI Uses in Industries, Comparison to Existing Tech, Ethics, and Concerns of AI.

The last two categories were more critical, and the negative sentiment within them changed over the months after the launch. The Ethics cluster focuses on criticism around fake news, algorithmic bias, fairness, and other issues studied by communities such as the FAccT conference; in this cluster, academia plays a central role in constructing the narratives. The Concerns cluster represents criticism that is more diverse and decentralized, with fewer academic users. Here, the discourse centers on ChatGPT’s generative and emerging capabilities, such as generating fake citations, the lack of authenticity, or even destroying humanity.

The paper was written in a unique collaboration between Maayan Cohen, an anthropologist in our group, and Dani Movso and Michael Khavkin, who are computer scientists. The paper is open access, and we have also released GPTalyze, a simple API that wraps ChatGPT for analyzing tweets and other short text snippets. It is useful for tasks such as sentiment analysis and topic extraction.
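The paper's analysis pipeline is not reproduced here, but the idea of tracking how negative sentiment shifts month by month within each topic cluster can be sketched in a few lines. This is a minimal illustration that assumes every tweet has already been assigned a cluster and a sentiment label (for example, by a topic model and a sentiment classifier). The function name `monthly_negative_share`, the label strings, and the toy records are hypothetical; they are not taken from the paper or from GPTalyze, and the toy records are not real data.

```python
from collections import Counter, defaultdict
from datetime import date


def monthly_negative_share(records):
    """Return {cluster: {(year, month): share of negative tweets}}.

    Hypothetical input format: an iterable of (tweet_date, cluster, label)
    triples, where label is assumed to be "negative", "neutral", or "positive".
    """
    totals = defaultdict(Counter)     # cluster -> (year, month) -> tweet count
    negatives = defaultdict(Counter)  # cluster -> (year, month) -> negative count
    for day, cluster, label in records:
        month = (day.year, day.month)
        totals[cluster][month] += 1
        if label == "negative":
            negatives[cluster][month] += 1
    # Divide negative counts by total counts, listing months in chronological order.
    return {
        cluster: {
            month: negatives[cluster][month] / count
            for month, count in sorted(months.items())
        }
        for cluster, months in totals.items()
    }


if __name__ == "__main__":
    # Toy records for illustration only; not drawn from the paper's dataset.
    sample = [
        (date(2022, 12, 5), "Ethics", "neutral"),
        (date(2022, 12, 9), "Ethics", "negative"),
        (date(2023, 1, 14), "Ethics", "negative"),
        (date(2023, 1, 20), "Concerns of AI", "negative"),
        (date(2023, 1, 22), "Concerns of AI", "positive"),
    ]
    for cluster, series in monthly_negative_share(sample).items():
        print(cluster, series)
```

Counting per (year, month) keeps the aggregation independent of how the clusters or labels were produced, so the same function would work whether labels come from a classical classifier or from an LLM-based tool.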