New paper in Information, Communication & Society

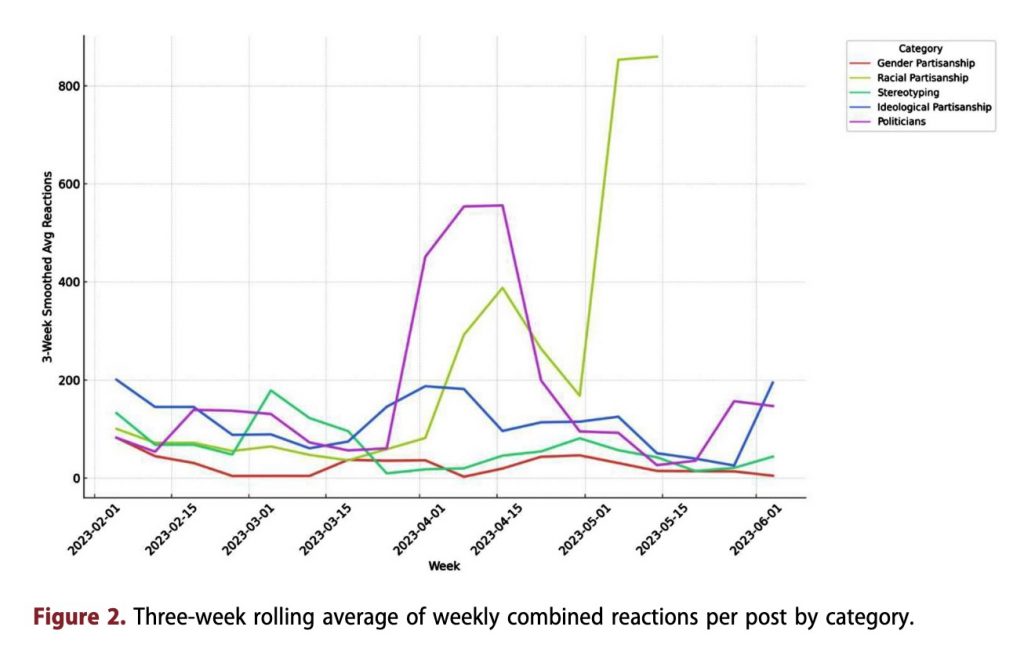

We have a new paper just published in Information, Communication & Society. In it, Maayan Cohen and Eran Toch look at how the discourse around algorithmic bias was taken up, amplified, and reframed across ideological lines during the launch of ChatGPT 3.5. The paper can be accessed here. We used a mixed-method approach, combining thematic analysis of high-engagement posts with digital ethnography. First, we found that academic framings of algorithmic bias have shaped public discourse: many viral posts, often authored by academics, drew on liberal critiques rooted in fairness, representation, and critical theory. Second, we document how conservative users on X adopted the same relational logic common in fairness discourse but redirected the critique toward what they perceived as unfair treatment of, well, mostly Trump, but also of right-wing approaches. Our findings suggest that algorithmic bias has become a malleable cultural object, perhaps too malleable. This malleability allows the concept to serve as a tool of ethical accountability, but also makes it vulnerable to ideological inversion.